Introduction to HDFS

HDFS (Hadoop Distributed File System) is a distributed file system designed to store very large amounts of data. An HDFS cluster consists of one NameNode and multiple DataNodes.

HDFS features

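- Master/slave architecture: the NameNode (master) manages the file system namespace, while the DataNodes (slaves) store the actual data.
- Block storage: files are split into fixed-size blocks (128 MB by default since Hadoop 2.x, set by `dfs.blocksize`), which are spread across the DataNodes.
- Replication: each block is stored on several DataNodes (3 copies by default, set by `dfs.replication`) for fault tolerance.
- Metadata management: the NameNode keeps the metadata, such as the directory tree, file attributes, and which blocks make up each file.
- Abstract directory tree: clients see a single Linux-like hierarchical namespace (e.g. `/study/hdfs`), no matter which machines actually hold the blocks.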
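To make the block-storage and replication points concrete, here is a minimal Python model of how a file is cut into blocks and how much raw disk space its replicas consume. It assumes the Hadoop 2.x/3.x defaults of a 128 MB block size and a replication factor of 3. The function names are illustrative only and are not part of any Hadoop API.

```python
# Simplified model of HDFS block splitting and replication.
# Assumed defaults: dfs.blocksize = 128 MiB, dfs.replication = 3
# (a real cluster may override both in hdfs-site.xml).
MiB = 1024 * 1024
BLOCK_SIZE = 128 * MiB
REPLICATION = 3


def split_into_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list[int]:
    """Return the size of each block a file of `file_size` bytes is cut into."""
    full, rest = divmod(file_size, block_size)
    blocks = [block_size] * full
    if rest:
        # The last block only holds the remaining bytes; it is not padded.
        blocks.append(rest)
    return blocks


def raw_storage(file_size: int, replication: int = REPLICATION) -> int:
    """Bytes consumed across all DataNodes: every block is stored `replication` times."""
    return file_size * replication


if __name__ == "__main__":
    size = 300 * MiB
    print([b // MiB for b in split_into_blocks(size)])  # block sizes in MiB
    print(raw_storage(size) // MiB)                      # total MiB on disk
    print(split_into_blocks(1340))                       # a small file: one short block
```

A 300 MB file becomes two full 128 MB blocks plus one 44 MB block, and with three replicas it occupies about 900 MB of raw disk across the cluster.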

HDFS shell command line

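- Hadoop ships with a built-in shell command line of the form `hadoop fs <args>`. The `hadoop fs` prefix is fixed and means the command operates on a file system. Which file system is actually accessed depends on the scheme prefix of the path URL in the arguments: `file:///` selects the local file system and `hdfs://node1:8020/` selects HDFS. A path without a scheme, such as `/`, refers to the default file system set by `fs.defaultFS`. On this cluster that default is HDFS, so `hadoop fs -ls /` lists the same entries as `hdfs://node1:8020/`. When HDFS is the file system in use, `hdfs dfs` is a synonym for `hadoop fs`. Examples: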

```
[root@node1 ~]# hadoop fs -ls file:///
Found 21 items
-rw-r--r-- 1 root root 0 2021-10-26 14:27 file:///.autorelabel
dr-xr-xr-x - root root 28672 2021-10-26 15:12 file:///bin
dr-xr-xr-x - root root 4096 2020-09-11 14:44 file:///boot
drwxr-xr-x - root root 3260 2022-10-25 19:23 file:///dev
drwxr-xr-x - root root 8192 2022-10-25 19:23 file:///etc
drwxr-xr-x - root root 48 2021-10-26 15:15 file:///export
drwxr-xr-x - root root 6 2018-04-11 12:59 file:///home
dr-xr-xr-x - root root 4096 2020-09-11 14:40 file:///lib
dr-xr-xr-x - root root 24576 2020-09-11 14:40 file:///lib64
drwxr-xr-x - root root 6 2018-04-11 12:59 file:///media
drwxr-xr-x - root root 6 2018-04-11 12:59 file:///mnt
drwxr-xr-x - root root 16 2020-09-11 14:40 file:///opt
dr-xr-xr-x - root root 0 2022-10-25 19:23 file:///proc
dr-xr-x--- - root root 4096 2022-10-24 21:15 file:///root
drwxr-xr-x - root root 840 2022-10-25 19:23 file:///run
dr-xr-xr-x - root root 16384 2021-10-26 15:13 file:///sbin
drwxr-xr-x - root root 6 2018-04-11 12:59 file:///srv
dr-xr-xr-x - root root 0 2022-10-25 19:23 file:///sys
drwxrwxrwt - root root 4096 2022-10-25 19:34 file:///tmp
drwxr-xr-x - root root 155 2020-09-11 14:39 file:///usr
drwxr-xr-x - root root 4096 2020-09-11 14:46 file:///var
[root@node1 ~]# hadoop fs -ls hdfs://node1:8020/
Found 3 items
drwxr-xr-x - root supergroup 0 2022-10-24 21:14 hdfs://node1:8020/itcast
drwx------ - root supergroup 0 2021-10-26 15:20 hdfs://node1:8020/tmp
drwxr-xr-x - root supergroup 0 2021-10-26 15:23 hdfs://node1:8020/user
[root@node1 ~]# hadoop fs -ls /
Found 3 items
drwxr-xr-x - root supergroup 0 2022-10-24 21:14 /itcast
drwx------ - root supergroup 0 2021-10-26 15:20 /tmp
drwxr-xr-x - root supergroup 0 2021-10-26 15:23 /user
[root@node1 ~]# hdfs dfs -ls /
Found 3 items
drwxr-xr-x - root supergroup 0 2022-10-24 21:14 /itcast
drwx------ - root supergroup 0 2021-10-26 15:20 /tmp
drwxr-xr-x - root supergroup 0 2021-10-26 15:23 /user
```
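The path-to-file-system rule shown above can be sketched in a few lines of Python. This is a simplified model, not Hadoop's own resolution code. It assumes `fs.defaultFS` is `hdfs://node1:8020`, which is consistent with `hadoop fs -ls /` matching the `hdfs://node1:8020/` listing. It also only handles absolute paths.

```python
from urllib.parse import urlparse

# Assumption: fs.defaultFS (core-site.xml) on this cluster is hdfs://node1:8020.
DEFAULT_FS = "hdfs://node1:8020"


def qualify(path: str, default_fs: str = DEFAULT_FS) -> str:
    """Return the fully qualified URI that a command-line path refers to."""
    if urlparse(path).scheme:
        # An explicit scheme (file://, hdfs://, ...) selects the file system directly.
        return path
    if not path.startswith("/"):
        # Real Hadoop resolves relative paths against the user's working
        # directory (/user/<name>); this sketch only models absolute paths.
        raise ValueError("only absolute paths are modelled here")
    return default_fs.rstrip("/") + path


def scheme_of(path: str, default_fs: str = DEFAULT_FS) -> str:
    """Name (URI scheme) of the file system that will serve the path."""
    return urlparse(qualify(path, default_fs)).scheme


if __name__ == "__main__":
    for p in ["file:///", "hdfs://node1:8020/", "/"]:
        print(f"{p!r:24} -> {qualify(p)} ({scheme_of(p)})")
```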

Common shell operations

Most everyday HDFS shell operations work much like their Linux counterparts:

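- Create a directory: `hadoop fs -mkdir -p <path>`. As with Linux `mkdir -p`, the `-p` option also creates any missing parent directories and does not fail if the directory already exists.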

```
[root@node1 ~]# hadoop fs -mkdir -p /study/hdfs
[root@node1 ~]# hdfs dfs -ls /
Found 4 items
drwxr-xr-x - root supergroup 0 2022-10-24 21:14 /itcast
drwxr-xr-x - root supergroup 0 2022-10-25 20:11 /study
drwx------ - root supergroup 0 2021-10-26 15:20 /tmp
drwxr-xr-x - root supergroup 0 2021-10-26 15:23 /user
```
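The effect of `-p` on the directory tree can be modelled with a set of absolute directory paths. This is a toy sketch: the function `mkdir` and its `parents` flag are hypothetical stand-ins for the command and its `-p` option, not Hadoop code. It shows why a single `-mkdir -p /study/hdfs` created both `/study` and `/study/hdfs` above.

```python
from pathlib import PurePosixPath


def mkdir(namespace: set[str], path: str, parents: bool = False) -> list[str]:
    """Toy model of `hadoop fs -mkdir [-p]` on a set of absolute directory paths.

    Returns the directories actually created, root-most first.
    """
    target = PurePosixPath(path)
    if not parents:
        if str(target.parent) not in namespace:
            # Without -p a missing parent directory is an error.
            raise FileNotFoundError(str(target.parent))
        if str(target) in namespace:
            raise FileExistsError(str(target))
        namespace.add(str(target))
        return [str(target)]
    created = []
    # target.parents is nearest-first ('/study', '/'); reverse it to walk from the root.
    for d in [*reversed(target.parents), target]:
        if str(d) not in namespace:
            namespace.add(str(d))
            created.append(str(d))
    return created


if __name__ == "__main__":
    ns = {"/", "/itcast", "/tmp", "/user"}
    print(mkdir(ns, "/study/hdfs", parents=True))  # creates /study and /study/hdfs
    print(mkdir(ns, "/study/hdfs", parents=True))  # already there: nothing created, no error
```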

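- List files: `hadoop fs -ls [-h] <path>`. The `-h` option prints file sizes in human-readable form, e.g. `1.3 K` instead of `1340`. For a file entry, the second column is the replication factor (3 here). Directories show `-` in that column.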


```
[root@node1 ~]# hadoop fs -put anaconda-ks.cfg /study/hdfs
[root@node1 ~]# hadoop fs -ls /study/hdfs
Found 1 items
-rw-r--r-- 3 root supergroup 1340 2022-10-25 20:14 /study/hdfs/anaconda-ks.cfg
[root@node1 ~]# hadoop fs -ls -h /study/hdfs
Found 1 items
-rw-r--r-- 3 root supergroup 1.3 K 2022-10-25 20:14 /study/hdfs/anaconda-ks.cfg
```

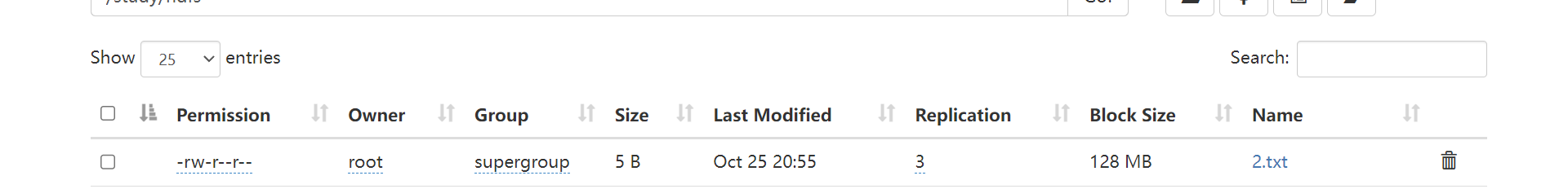
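The `-h` sizes use binary (1024-based) prefixes. The Python sketch below reproduces the format seen in the listing above, where `1340` becomes `1.3 K`. It is modelled on that observed output, and `human_size` is a hypothetical name, not Hadoop's own formatting code. It assumes non-negative sizes.

```python
UNITS = ["K", "M", "G", "T", "P", "E"]  # binary prefixes for 1024**1 .. 1024**6


def human_size(n: int) -> str:
    """Format a non-negative byte count in the style of `hadoop fs -ls -h`."""
    if n < 1024:
        return str(n)  # small sizes are printed as plain byte counts
    exp = 1
    # Climb to the largest prefix whose value does not exceed n.
    while exp < len(UNITS) and n >= 1024 ** (exp + 1):
        exp += 1
    unit_value = 1024 ** exp
    if n % unit_value == 0:
        return f"{n // unit_value} {UNITS[exp - 1]}"  # exact multiples: no decimals
    text = f"{n / unit_value:.1f}"
    if text.startswith("1024") and exp < len(UNITS):
        # Rounding pushed the value up to the next prefix (e.g. 1048575 bytes).
        exp += 1
        text = f"{n / 1024 ** exp:.1f}"
    return f"{text} {UNITS[exp - 1]}"


if __name__ == "__main__":
    for size in (5, 1340, 1536, 128 * 1024 * 1024):
        print(size, "->", human_size(size))
```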

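- Upload a file: `hadoop fs -put <localsrc> ... <dst>` copies one or more files from the local file system to the destination file system.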

```
[root@node1 ~]# echo 2222 > 2.txt
[root@node1 ~]# hadoop fs -put file:///root/2.txt hdfs://node1:8020/study/hdfs
```

- View a file: `hadoop fs -cat <path>` prints the file's contents to standard output.

```
# hadoop fs -cat /itcast/anaconda-ks.cfg
```

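- Download a file: `hadoop fs -get <src> ... <localdst>` copies files from HDFS to the local file system. If the local destination is an existing directory, the file keeps its name. Otherwise it is saved under the given name, as with `./666.txt` in the last command.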

```
[root@node1 ~]# ll
total 32
-rw-r--r-- 1 root root 2 Oct 24 21:15 1.txt
-rw-------. 1 root root 1340 Sep 11 2020 anaconda-ks.cfg
drwxr-xr-x 2 root root 55 Oct 5 00:08 hivedata
-rw------- 1 root root 23341 Oct 5 00:11 nohup.out
[root@node1 ~]# hadoop fs -get hdfs://node1:8020/itcast/2.txt file:///root/
[root@node1 ~]# ll
total 36
-rw-r--r-- 1 root root 2 Oct 24 21:15 1.txt
-rw-r--r-- 1 root root 5 Oct 25 21:08 2.txt
-rw-------. 1 root root 1340 Sep 11 2020 anaconda-ks.cfg
drwxr-xr-x 2 root root 55 Oct 5 00:08 hivedata
-rw------- 1 root root 23341 Oct 5 00:11 nohup.out
[root@node1 ~]# hadoop fs -get /itcast/2.txt ./666.txt
```
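Both `-put` and `-get` decide the final file name the same way. The rule is sketched below in Python for a single source file, with URI schemes stripped from the paths. This is a simplified model: `resolve_target` and its `dst_is_dir` flag are hypothetical, and in the real command the shell checks whether the destination exists as a directory.

```python
import posixpath


def resolve_target(src: str, dst: str, dst_is_dir: bool) -> str:
    """Where a single copied file ends up for -put/-get (simplified model).

    If the destination is an existing directory, the file keeps its own name
    inside it; otherwise the destination path itself becomes the new file name.
    """
    if dst_is_dir:
        return posixpath.join(dst, posixpath.basename(src))
    return dst


if __name__ == "__main__":
    # hadoop fs -get hdfs://node1:8020/itcast/2.txt file:///root/
    print(resolve_target("/itcast/2.txt", "/root/", dst_is_dir=True))
    # hadoop fs -get /itcast/2.txt ./666.txt
    print(resolve_target("/itcast/2.txt", "./666.txt", dst_is_dir=False))
```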

- Copy a file: `hadoop fs -cp <src> ... <dst>` copies files within or between file systems.

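- Append data to an HDFS file: `hadoop fs -appendToFile <localsrc> ... <dst>`. Note: this command needs the whole cluster to be running, because appending writes data to the DataNodes that hold the file's blocks. (For details, see my previous blog post.)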

```
[root@node1 ~]# hadoop fs -appendToFile 1.txt 3.txt /2.txt
[root@node1 ~]# hadoop fs -cat /2.txt
2222
1
3
```
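The result above follows a simple rule: the local sources are added to the end of the HDFS file in the order they are listed, and the existing content is never rewritten. The Python model below states that rule explicitly. It assumes `1.txt` holds `1\n` (its size of 2 bytes in the earlier `ll` listing fits this) and `3.txt` holds `3\n`. The function name is hypothetical.

```python
def append_to_file(existing: bytes, *local_sources: bytes) -> bytes:
    """Model of `hadoop fs -appendToFile <localsrc> ... <dst>`.

    HDFS files can only grow at the end: the existing bytes are kept as-is
    and the local sources are appended in the order given.
    """
    return existing + b"".join(local_sources)


if __name__ == "__main__":
    hdfs_2_txt = b"2222\n"  # content written by `echo 2222 > 2.txt`
    one_txt = b"1\n"        # assumed content of local 1.txt
    three_txt = b"3\n"      # assumed content of local 3.txt
    print(append_to_file(hdfs_2_txt, one_txt, three_txt).decode(), end="")
```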

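- Move (rename) data: `hadoop fs -mv <src> ... <dst>`. Within the same file system, this only changes the path in the NameNode's metadata. Moving files across file systems is not permitted.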
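The difference between `-cp` and `-mv` inside HDFS comes down to the NameNode metadata. A copy writes the data again, so the new file gets new blocks. A move only re-keys the existing entry. The Python sketch below models the namespace as a dict from path to block IDs. It is a toy model: the block IDs and function names are made up, and `dst` is always treated as a full file path rather than a directory.

```python
import itertools

# Toy NameNode namespace: path -> list of block IDs (IDs are made up).
_next_block_id = itertools.count(1001)


def cp(ns: dict[str, list[int]], src: str, dst: str) -> None:
    """Copy: the data is written again, so dst gets brand-new blocks."""
    ns[dst] = [next(_next_block_id) for _ in ns[src]]


def mv(ns: dict[str, list[int]], src: str, dst: str) -> None:
    """Move/rename within one file system: only the path entry changes;
    the block list (and the data on the DataNodes) stays the same."""
    if dst in ns:
        raise FileExistsError(dst)
    ns[dst] = ns.pop(src)


if __name__ == "__main__":
    ns = {"/study/hdfs/2.txt": [1]}
    cp(ns, "/study/hdfs/2.txt", "/itcast/2.txt")
    mv(ns, "/itcast/2.txt", "/itcast/666.txt")
    print(ns)  # the copy has its own block IDs; the rename kept them
```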
